Monocular Camera Localization in 3D LiDAR Maps

Research Problem

Localizing a monocular camera in a 3D LiDAR map.

Localizing a camera in a given map is essential for vision-based navigation.

Problems with GPS: While GPS can provide accurate position estimates at a global scale, it suffers from substantial errors due to multipath effects in urban canyons and does not provide sufficiently accurate estimates indoors.

Related Work

- A popular approach to mobile robot localization is to match sensor data against a previously acquired map. Many existing methods use the same sensor type for mapping and localization.

- However, the integration of both sensor types (LiDAR and camera) has mostly been done in the so-called back-end of the SLAM process rather than by matching their data directly.

LiDARs

- Estimating incremental movements: scan matching techniques based on variants of the Iterative Closest Point (ICP) algorithm.

- Detecting loop closures: feature-based approaches.

- Estimating consistent trajectories and maps: modern methods often rely on graph-based optimization techniques.
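The scan-matching step can be illustrated with a minimal point-to-point ICP sketch. It assumes two 3D point clouds with a small initial offset and uses brute-force nearest neighbours. Each rigid update is estimated in closed form via SVD (the Kabsch method). The function names are illustrative and are not taken from any particular LiDAR SLAM system.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares R, t such that R @ src[i] + t ~ dst[i] (Kabsch / SVD)."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    H = (src - mu_s).T @ (dst - mu_d)  # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # flip the last axis if needed so that R is a proper rotation (det = +1)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = mu_d - R @ mu_s
    return R, t


def icp(scan, reference, iterations=30):
    """Point-to-point ICP: alternate nearest-neighbour association and rigid alignment."""
    R_total, t_total = np.eye(3), np.zeros(3)
    current = scan.copy()
    for _ in range(iterations):
        # brute-force nearest neighbours; a k-d tree would be used for real scans
        d2 = ((current[:, None, :] - reference[None, :, :]) ** 2).sum(axis=2)
        matched = reference[d2.argmin(axis=1)]
        R, t = best_rigid_transform(current, matched)
        current = current @ R.T + t
        # accumulate: x -> R (R_total x + t_total) + t
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total
```

ICP only converges to the right answer when the initial offset is small relative to the point spacing. This is one reason incremental scan matching is paired with separate loop-closure detection.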

Advantages:

- LiDARs provide accurate range information.

- They are mostly invariant to lighting conditions.

Disadvantages:

- Expensive.

- Heavy.

Since LiDARs provide accurate range information and are mostly invariant to lighting conditions, such methods have the potential to create highly accurate maps of indoor and outdoor environments. Localizing a LiDAR within these maps can be achieved with pure scan matching or using filtering approaches.

Visual SLAM

Uses images as the only source of information. In contrast to structure from motion (SfM), visual SLAM typically targets real-time applications.

MonoSLAM

The first system to achieve real-time performance.

Based on an extended Kalman filter that estimates the camera's pose, velocity, and feature positions.
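The filtering idea can be illustrated with a deliberately simplified toy. It is a Kalman filter over a 1-D state [camera position, camera velocity, feature position]. The motion model is constant-velocity, and the measurement is the feature's position relative to the camera. Because this toy model is linear, the EKF linearization is exact and the filter reduces to a plain Kalman filter. MonoSLAM's real state (6-DoF pose, angular velocity, 3D features, projective measurements) is far richer, and the noise values below are arbitrary assumptions.

```python
import numpy as np


def kf_step(mu, P, z, dt, q=1e-3, r=1e-2):
    """One predict/update cycle for the toy state [camera pos, camera vel, feature pos]."""
    # prediction: constant-velocity camera, static map feature
    F = np.array([[1.0, dt, 0.0],
                  [0.0, 1.0, 0.0],
                  [0.0, 0.0, 1.0]])
    Q = q * np.diag([dt**3 / 3.0, dt, 0.0])  # simplified: no noise on the static feature
    mu = F @ mu
    P = F @ P @ F.T + Q
    # update: z = feature_pos - camera_pos (+ noise); H is the measurement Jacobian
    H = np.array([[-1.0, 0.0, 1.0]])
    innovation = z - (mu[2] - mu[0])
    S = H @ P @ H.T + r                      # innovation covariance, shape (1, 1)
    K = P @ H.T / S                          # Kalman gain, shape (3, 1)
    mu = mu + K[:, 0] * innovation
    P = (np.eye(3) - K @ H) @ P
    return mu, P
```

The key point is that camera pose, velocity, and map features share one joint state and covariance. A single observation therefore updates all of them together.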

PTAM

Parallelizes camera tracking and map building in order to use expensive optimization techniques without sacrificing real-time performance.
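The tracking/mapping split can be sketched with two threads. A tracking loop handles every frame, and a mapping worker receives keyframes through a queue. The mapping worker is where the expensive optimization would run. This is a structural toy: the keyframe test and the optimization are hypothetical placeholders, not PTAM's actual implementation.

```python
import queue
import threading


def run_tracking_and_mapping(frames, is_keyframe):
    """Toy sketch: tracking handles every frame; a mapping thread consumes keyframes."""
    keyframes = queue.Queue()
    map_keyframes = []  # written only by the mapping thread, read after join()

    def mapping_worker():
        while True:
            kf = keyframes.get()
            if kf is None:  # sentinel: tracking has finished
                break
            # placeholder for expensive map optimization (e.g. bundle adjustment)
            map_keyframes.append(kf)

    mapper = threading.Thread(target=mapping_worker)
    mapper.start()
    tracked = []
    for frame in frames:
        tracked.append(frame)  # tracking never waits for the mapper
        if is_keyframe(frame):
            keyframes.put(frame)
    keyframes.put(None)
    mapper.join()
    return tracked, map_keyframes
```

For example, `run_tracking_and_mapping(range(10), lambda f: f % 3 == 0)` tracks all ten frames and hands frames 0, 3, 6, and 9 to the mapper.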

Advantages:

- Low-cost, lightweight, and widely available.

Disadvantages:

- Cameras do not directly provide range information.

Visual Localization

The majority of research concerning visual localization has focused on matching photometric characteristics of the environment.

- Comparing image feature descriptors such as SIFT [12] or SURF [2].

- Operating directly on image intensity values.
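Descriptor-based matching can be sketched as nearest-neighbour search with Lowe's ratio test. The example assumes descriptors have already been extracted as float vectors (SIFT-like); it does not compute SIFT or SURF features itself. The ratio threshold of 0.8 is an assumed typical value.

```python
import numpy as np


def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Match each row of desc_a to desc_b by nearest neighbour with Lowe's ratio test."""
    # pairwise Euclidean distances, shape (len(desc_a), len(desc_b)); needs len(desc_b) >= 2
    dist = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(dist):
        best, second = np.argsort(row)[:2]
        # accept only if the best match is clearly better than the runner-up
        if row[best] < ratio * row[second]:
            matches.append((i, int(best)))
    return matches
```

Such photometric matching works only while the descriptors of the same place stay similar. Appearance changes over time break exactly this assumption.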

Main issue:

The environment's photometric appearance changes substantially over time, especially across seasons.

Approaches:

- Storing multiple image sequences of the same place captured at different times.

- Matching trajectories using a similarity matrix.
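Trajectory matching with a similarity matrix can be sketched in the spirit of sequence-based place recognition. First compute the cosine similarity between global image descriptors of two sequences. Then score straight diagonals, which assumes both traversals move at roughly the same speed. The descriptors and function names here are hypothetical.

```python
import numpy as np


def similarity_matrix(seq_a, seq_b):
    """Cosine similarity between every pair of global image descriptors (one per row)."""
    a = seq_a / np.linalg.norm(seq_a, axis=1, keepdims=True)
    b = seq_b / np.linalg.norm(seq_b, axis=1, keepdims=True)
    return a @ b.T


def best_sequence_offset(S):
    """Offset into seq_b whose straight diagonal (equal speed assumed) best matches all of seq_a."""
    n = S.shape[0]
    scores = [np.trace(S[:, off:off + n]) for off in range(S.shape[1] - n + 1)]
    return int(np.argmax(scores))
```

Matching whole sequences rather than single images makes the decision more robust to individual frames whose appearance has changed.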

Camera localization in geometric maps built from LiDAR data

Wolcott et al.: localize an autonomous vehicle in urban environments.

Using LiDAR intensity values, they render a synthetic view of the mapped ground plane and match it against the camera image by maximizing normalized mutual information. This approach only provides a 3-DoF pose estimate.
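Normalized mutual information can be computed from a joint intensity histogram. The sketch assumes the definition NMI(A, B) = (H(A) + H(B)) / H(A, B). Under this definition NMI equals 2 for identical images and is close to 1 for independent ones. The bin count is an arbitrary choice, and rendering the synthetic view is not shown.

```python
import numpy as np


def _entropy(p):
    """Shannon entropy (nats) of a probability array, ignoring empty bins."""
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))


def normalized_mutual_information(img_a, img_b, bins=32):
    """NMI(A, B) = (H(A) + H(B)) / H(A, B), estimated from a joint intensity histogram."""
    joint, _, _ = np.histogram2d(img_a.ravel(), img_b.ravel(), bins=bins)
    p_ab = joint / joint.sum()
    h_a = _entropy(p_ab.sum(axis=1))
    h_b = _entropy(p_ab.sum(axis=0))
    return (h_a + h_b) / _entropy(p_ab)
```

Localization then searches over candidate poses for the rendered view that maximizes this score against the camera image.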

Pascoe et al.: Direct visual localisation and calibration for road vehicles in changing city environments

Their appearance prior (map) combines geometric and photometric data and is used to render a view that is then matched against the live image by minimizing the normalized information distance. This approach estimates the full 6-DoF camera pose.
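The normalized information distance can be sketched the same way. The version below assumes NID(A, B) = (H(A, B) - I(A; B)) / H(A, B), with I(A; B) = H(A) + H(B) - H(A, B). NID is 0 for identical images and close to 1 for unrelated ones. Histogram binning is again an assumption, and rendering from the prior is omitted.

```python
import numpy as np


def _entropy(p):
    """Shannon entropy (nats) of a probability array, ignoring empty bins."""
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))


def normalized_information_distance(img_a, img_b, bins=32):
    """NID(A, B) = (H(A, B) - I(A; B)) / H(A, B), with I(A; B) = H(A) + H(B) - H(A, B)."""
    joint, _, _ = np.histogram2d(img_a.ravel(), img_b.ravel(), bins=bins)
    p_ab = joint / joint.sum()
    h_ab = _entropy(p_ab)
    mi = _entropy(p_ab.sum(axis=1)) + _entropy(p_ab.sum(axis=0)) - h_ab
    return (h_ab - mi) / h_ab
```

Unlike NMI, NID is a distance in [0, 1], so the pose search minimizes it rather than maximizing it.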

Both approaches perform matching in 2D space and therefore require expensive image rendering supported by GPU hardware. Furthermore, their priors comprise LiDAR intensities and visual texture, respectively.

Solution

Main contributions, distinctive features of the method, and main assumptions

Inputs:

An image stream and a map represented as a point cloud.

Output:

A 6-DoF camera pose estimate at frame rate.

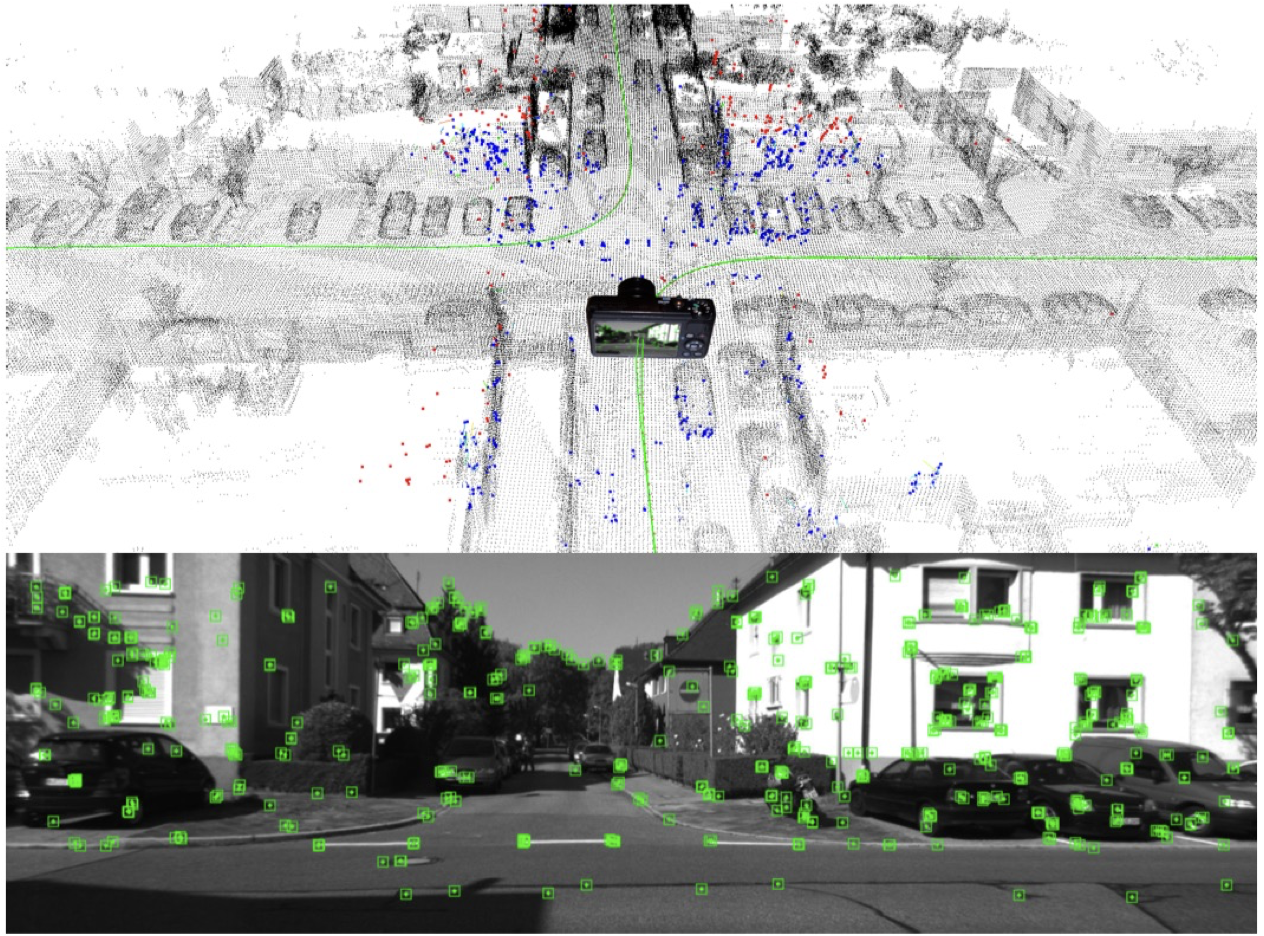

Our approach builds on a visual odometry system that uses local bundle adjustment to reconstruct camera poses and a sparse set of 3D points from image features. Given the camera poses relative to these points, we indirectly localize the camera by aligning the reconstructed points with the map.
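Once a similarity transformation (s, R, t) from the visual odometry frame to the map frame is known, the camera pose follows indirectly. The sketch below makes two assumptions. First, the convention is x_map = s R x_vo + t. Second, a camera pose is given as a camera-to-world rotation R_wc and a camera centre c_w in the odometry frame. All function and variable names are hypothetical.

```python
import numpy as np


def apply_similarity(s, R, t, points):
    """Map (N, 3) points from the visual-odometry frame to the map frame: x_map = s R x + t."""
    return s * points @ R.T + t


def camera_pose_in_map(s, R, t, R_wc, c_w):
    """Transfer a camera orientation R_wc and centre c_w from the VO frame to the map frame."""
    R_mc = R @ R_wc          # the scale factor does not change orientation
    c_m = s * R @ c_w + t    # the camera centre transforms like any other point
    return R_mc, c_m
```

The same (s, R, t) moves both the reconstructed points and the camera. Aligning the points with the map is therefore enough to localize the camera.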

The alignment is performed once the visual odometry, which is based on components of ORB-SLAM, provides the first local reconstruction consisting of two keyframe poses and a minimal number of 3D points.

Since our approach is not intended for global localization, a coarse estimate for the transformation between this initial reconstruction and the map is required. Two poses are necessary to infer the initial scale.
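Monocular visual odometry recovers the reconstruction only up to scale, so a single pose is not enough. The sketch assumes coarse map-frame positions of the two initial keyframes are available. It infers the initial scale as the ratio of the two baselines. The helper name initial_scale is hypothetical.

```python
import numpy as np


def initial_scale(c1_vo, c2_vo, c1_map, c2_map):
    """Hypothetical helper: baseline between two keyframes in map units over VO units."""
    baseline_vo = np.linalg.norm(np.asarray(c2_vo) - np.asarray(c1_vo))
    baseline_map = np.linalg.norm(np.asarray(c2_map) - np.asarray(c1_map))
    if baseline_vo == 0:
        # a single pose (or two coincident ones) carries no scale information
        raise ValueError("keyframes must not coincide: scale is unobservable")
    return baseline_map / baseline_vo
```

For example, a VO baseline of 0.5 units that corresponds to 2 m in the map gives a scale of 4.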

Method

The visual odometry system reconstructs keyframe poses and 3D points by solving a local bundle adjustment problem.
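Local bundle adjustment jointly refines keyframe poses and 3D points by minimizing reprojection errors. The sketch below computes only the pixel residuals for one pinhole camera. It assumes intrinsics K and a world-to-camera pose (R_cw, t_cw). A full solver would stack these residuals over all keyframes in the local window and minimize their squared sum with a nonlinear least-squares method.

```python
import numpy as np


def reprojection_residuals(K, R_cw, t_cw, points_w, observations):
    """Pixel residuals (observed - projected) for one pinhole camera.

    R_cw, t_cw map world points into the camera frame: x_c = R_cw @ x_w + t_cw.
    """
    x_c = points_w @ R_cw.T + t_cw          # points in camera coordinates, shape (N, 3)
    uvw = x_c @ K.T                          # homogeneous pixel coordinates
    projected = uvw[:, :2] / uvw[:, 2:3]     # perspective division
    return (observations - projected).ravel()
```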

Our method exploits the advantages of both sensors by using a LiDAR for mapping and a camera for localization.

We propose a novel approach that tracks the pose of a monocular camera with respect to a given 3D LiDAR map.

We employ a visual odometry system based on local bundle adjustment to reconstruct a sparse set of 3D points from image features. These points are continuously matched against the map to track the camera pose in an online fashion.

The key idea of our work is to approach visual localization by matching geometry.

In this paper, we present a method to track the 6-DoF pose of a monocular camera in a 3D LiDAR map.

Our method employs visual odometry to track the camera motion and to reconstruct a sparse set of 3D points via local bundle adjustment. For this purpose, we rely on components of ORB-SLAM presented by Mur-Artal et al.


- Local Reconstruction

We use a consistent local reconstruction.

- Data Association

- Alignment
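The data-association and alignment steps can be sketched as an ICP-like loop with scale. Each reconstructed point is associated with its nearest map point. Pairs farther apart than a threshold are discarded. A similarity transformation is then estimated in closed form. This sketch swaps in Umeyama's closed-form least-squares solution and a hard distance gate as assumptions. The paper's own alignment may use a different (e.g. robust, iterative) optimization, and max_dist is a hypothetical parameter.

```python
import numpy as np


def umeyama_sim3(src, dst):
    """Closed-form (s, R, t) minimising sum ||dst_i - (s R src_i + t)||^2 (Umeyama, 1991)."""
    n = len(src)
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    xs, xd = src - mu_s, dst - mu_d
    cov = xd.T @ xs / n                      # 3x3 cross-covariance
    U, D, Vt = np.linalg.svd(cov)
    E = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        E[2, 2] = -1.0                       # enforce a proper rotation
    R = U @ E @ Vt
    s = np.trace(np.diag(D) @ E) / ((xs ** 2).sum() / n)
    t = mu_d - s * R @ mu_s
    return s, R, t


def align_to_map(points, map_points, max_dist=0.5, iterations=30):
    """Alternate gated nearest-neighbour association and Sim(3) estimation (ICP with scale)."""
    s_tot, R_tot, t_tot = 1.0, np.eye(3), np.zeros(3)
    for _ in range(iterations):
        current = s_tot * points @ R_tot.T + t_tot
        dist = np.linalg.norm(current[:, None, :] - map_points[None, :, :], axis=2)
        nn = dist.argmin(axis=1)
        keep = dist[np.arange(len(current)), nn] < max_dist  # drop far-away pairs
        if keep.sum() < 3:
            break
        s, R, t = umeyama_sim3(current[keep], map_points[nn[keep]])
        # compose: x -> s R (s_tot R_tot x + t_tot) + t
        s_tot, R_tot, t_tot = s * s_tot, R @ R_tot, s * R @ t_tot + t
    return s_tot, R_tot, t_tot
```

Estimating a similarity rather than a rigid transformation also lets the alignment correct the scale drift that monocular visual odometry accumulates.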

Characteristics

- Since it only relies on matching geometry, it is robust to changes in the photometric appearance of the environment

- Utilizing panoramic LiDAR maps additionally provides viewpoint invariance.

- Yet low-cost and lightweight camera sensors are used for tracking.

Main Contribution

The main contribution of this work is to continuously align this point set with the given map by matching their geometry and applying an estimated similarity transformation to indirectly localize the camera.

Conclusion

- Uses visual odometry to track the 6-DoF camera pose.

- Reconstructs a sparse set of 3D points via bundle adjustment.

- Aligns the reconstructed points with the map by continuously applying an estimated similarity transformation to indirectly localize the camera.

Advantages:

- We argue that our approach is advantageous because the geometry of the environment tends to be more stable than its photometric appearance which can change tremendously even over short periods.

- Our method exploits the advantages of both sensors by using a LiDAR for mapping and a camera for localization. It enables people to localize themselves accurately in such maps without being equipped with a LiDAR.

Disadvantages:

- Since our approach is not intended for global localization, a coarse estimate of the initial pose in the map is required. We employ a visual odometry system based on local bundle adjustment to reconstruct the camera poses relative to a sparse set of 3D points.